
10/18/2010

An inquest was opened at St Pancras Coroner’s Court on Monday, 18 October 2010, in relation to a hoard of American gold twenty-dollar coins found in the borough of Hackney, Greater London.

The inquest has been opened to determine whether the hoard qualifies as Treasure. Because the coins are less than 300 years old, they must meet all of the following criteria to qualify as Treasure:

1. Made of gold or silver

2. Deliberately concealed by the owner with a view to later recovery

3. The owner, or his or her present heirs or successors, must be unknown

The inquest will be resumed and concluded at the Poplar Coroner’s Court on the 8th day of February 2011.

The coins were reported to Kate Sumnall, Finds Liaison Officer for the Portable Antiquities Scheme, based at the Museum of London. There are 80 coins in total, all $20 pieces of the type known as the ‘Double-Eagle’, minted in the United States between 1854 and 1913.

Although some of the coins are less than 100 years old, they would not be the youngest items to fall under the definition of ‘Treasure’ or the earlier legal category of ‘Treasure Trove’: more recent British coins have qualified, including a hoard of silver threepences from Abbey Hulton, Staffordshire, dating to 1943, which was declared Treasure Trove. However, this find is unprecedented in the United Kingdom. The coins would have been worth a very substantial sum at the time they were deposited; this, coupled with the fact that they are a specific type of foreign currency, points to a compelling story behind their collection and concealment.

As the coins are composed predominantly of precious metal, they will qualify as ‘Treasure’ under the terms of the Treasure Act 1996, and thus become the property of the Crown, if the coroner finds that they were buried with the intention of later recovery. However, if the original owner or his or her heirs can establish their title to the coins, this will override the Crown’s claim.

The coroner has suspended the inquest until 8 February 2011 in order to allow possible claimants to come forward. Anyone with information about the original owners of the coins, or their heirs or successors, should provide it to me, the Treasure Registrar, at the British Museum. Claims should be submitted before the coroner concludes the inquest. We will require evidence of how, when, where and why the coins were concealed, and evidence that allows the Museum to be sure of any potential claimant’s ownership. Our office will work in cooperation with the Coroner and with the Department for Culture, Media and Sport, on whose behalf we administer Treasure cases, to evaluate claims of ownership.

There is no penalty for mistaken claims made in good faith but any false claims may be reported to the police for consideration of charges of perverting the course of justice, or other offences of dishonesty.

If no valid claim is made for the coins, and the Coroner finds them to be Treasure, the coins would then be valued by the Treasure Valuation Committee at their full market value. Hackney Museum has expressed an interest in acquiring the coins, so upon agreement of the valuation, it would have up to four months to raise the money to pay for the hoard, and this sum would be divided between the owner of the land and the finder.

The Treasure Act 1996 established a set of criteria that archaeological finds have to meet in order to be classed as ‘Treasure’, among which is a requirement that the item be over 300 years old at the time of recovery. For instance, finds made in 2010 would normally have to date from 1710 or earlier to qualify. However, the Act also provided that any find which would have qualified as ‘Treasure Trove’ under the old legislation (items of precious metal, buried with the intent of future recovery, whose owners cannot be traced) is classed as ‘Treasure’ even if it fails the other tests. This hoard falls under that provision, and it is the first such case in recent years that has not been disclaimed by the Crown.

Dr Barrie Cook of the British Museum (Department of Coins and Medals) stated in his report to the coroner:

The 80 coins are all gold 20-dollar pieces of the United States, issued between 1854 and 1913. The coins are thus all the same denomination, introduced in this form in 1850, and were struck to the same standard, 90% gold, used from 1837 until the end of US gold coinage in 1933. The catalogue shows that the coins gradually increase in number across the decades from 1870 to 1909 (13 coins from 1870-9; 14 from 1880-89; 18 from 1890-99; and 25 from 1900-9). Over a quarter of the total were issued in the last six years represented. Together these factors suggest that the material began to be put aside during this later period, rather than being built up systematically across a range of time represented. The main element among this latest material are the 17 coins dating to 1908, which suggests that a single batch of coins from that year might have formed the core for the group.

A catalogue of the hoard runs as follows:

| Date | Mint | Total number in find |
| --- | --- | --- |
| 1854 | San Francisco | 1 |
| 1867 | San Francisco | 1 |
| 1870 | San Francisco | 1 |
| 1875 | Carson City | 1 |
| 1875 | San Francisco | 1 |
| 1876 | San Francisco | 5 |
| 1876 | Philadelphia | 2 |
| 1877 | San Francisco | 2 |
| 1877 | Philadelphia | 1 |
| 1881 | San Francisco | 1 |
| 1882 | San Francisco | 2 |
| 1883 | San Francisco | 3 |
| 1884 | San Francisco | 2 |
| 1885 | San Francisco | 1 |
| 1888 | San Francisco | 4 |
| 1889 | San Francisco | 1 |
| 1890 | Philadelphia | 1 |
| 1891 | San Francisco | 1 |
| 1893 | San Francisco | 1 |
| 1894 | San Francisco | 4 |
| 1896 | San Francisco | 3 |
| 1898 | San Francisco | 4 |
| 1899 | San Francisco | 4 |
| 1900 | San Francisco | 2 |
| 1901 | San Francisco | 3 |
| 1902 | San Francisco | 2 |
| 1905 | San Francisco | 2 |
| 1907 | Philadelphia | 1 |
| 1908 | Philadelphia | 17 |
| 1909 | Philadelphia | 1 |
| 1910 | Philadelphia | 1 |
| 1913 | Philadelphia | 3 |
| 1913 | Denver | 1 |

The images above are copyright the Museum of London.

Ian Richardson, Treasure Registrar, British Museum, London WC1B 3DG, tel.: 020 7323 8546, e-mail: treasure@britishmuseum.org

Kate Sumnall, Finds Liaison Officer & Community Archaeologist, Department of Archaeological Collections and Archive, Museum of London, 150 London Wall, London EC2Y 5HN; tel.: 020 7814 5733; e-mail: ksumnall@museumoflondon.org.uk

09/30/2010

This post discusses how I’ve been using various geodata tools to enrich our database: principally Yahoo!, but also Flickr shapefiles, Google’s Maps and geocoder APIs, Geonames and OSdata, and I’m now exploring the Unlock project from EDINA to see what it can offer as well. I started writing this post back in May, but as I’ve just spoken at the W3G unconference in Stratford-on-Avon, I thought I’d finish it and get it out. Gary Gale and his helpers produced a very good unconference, at which I met some very interesting people (shame TW Bell couldn’t come!) and saw some good examples of what other people are up to.

My presentation from that conference is embedded here:

Most of us realise the power of maps, and I’ve made them a central part of the new Scheme website that we soft-launched at the end of March 2010. Hopefully this post isn’t too long and boring, and has some technical material that may be of use to others. As always, the content below is CC-NC-SA.

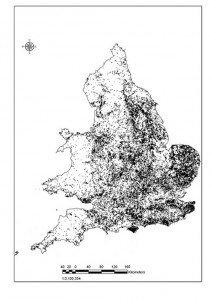

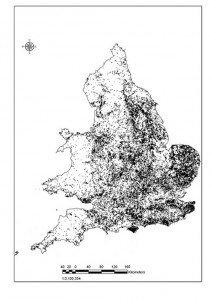

At the Scheme, we’ve been collecting data on the provenance of archaeological discoveries made by the public and publishing it online for 13 years now (much longer than I’ve worked for the Scheme!). These data are collated on our database and provide the basis for spatial interrogation of where and when these objects have been discovered. Many researchers use the database for a variety of geomatics work, for example patterning and cluster analysis. Several of our recent AHRC-funded PhD candidates have made GIS techniques an integral part of their research, for example:


- Tom Brindle, KCL

- Philippa Walton, UCL

- Ian Leins, Newcastle University

- Katie Robbins, Southampton University

Incidentally, they are all alumni of the Scheme (and one has recently rejoined!), and their research has been inspired by working on the data that we collate. I won’t be discussing the philosophical arguments about provenance and its meaning in this article; instead I’ll demonstrate how we’re using third-party tools to enhance find spot data on our site, and talk about some of the problems we face in making use of them. Parts of the post include code examples, but you can skip over them if you’re not into that (as the majority of our readers probably aren’t!).

Find spot data

Our Finds Liaison Officers record the majority of the objects we are shown onto our database, and ask the finder to provide the most accurate National Grid Reference (OSGB36) that they can. Many of our finders now use GPS units to produce grid references (we’re aware of the limits to the accuracy and precision these provide, but as most objects aren’t from secure archaeological contexts, the variance won’t affect work that much). We encourage people to provide grid references of 8 figures or more, and the proportion that do so is growing every year. The list below shows the precision of each grid reference length:

- 0 figure [SP] = 100 kilometre square

- 2 figure [SP11] = 10 kilometre square

- 4 figure [SP1212] = 1 kilometre square

- 6 figure [SP123123] = 100 metre square

Then we get the figures that are actually of some archaeological use:

- 8 figure [SP12341234] = 10 metre square

- 10 figure [SP1234512345] = 1 metre square

- 12 figure [SP123456123456] = 10 centimetre square
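The rule behind the list above is mechanical: each extra pair of figures shrinks the square by a factor of ten. As a rough illustration, here is a hypothetical PHP helper (`ngrPrecision()` is not part of the Scheme's code); it assumes a reference made of two grid letters followed by an even number of digits, with spaces ignored.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: side length (in metres) of the square implied by an
 * OSGB36 National Grid Reference such as "SP1212" or "SP12341234".
 * Assumes two grid letters (no "I") followed by 0-12 digits; spaces are ignored.
 */
function ngrPrecision(string $ngr): float
{
    $ngr = strtoupper(str_replace(' ', '', $ngr));
    if (!preg_match('/^[A-HJ-Z]{2}(\d{0,12})$/', $ngr, $m) || strlen($m[1]) % 2 !== 0) {
        throw new InvalidArgumentException("Not a valid grid reference: $ngr");
    }
    $figures = strlen($m[1]);
    // Each extra pair of figures divides the 100 km square's side by ten.
    return 100000 / (10 ** ($figures / 2));
}
```

For example, `ngrPrecision('SP1212')` gives 1000 (a 1 km square) and `ngrPrecision('SP12341234')` gives 10.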

This find spot data is given to us in confidence by finders and landowners, and we therefore have to protect that confidence. We have an agreement with the representative body of the main providers of our data, the metal detecting community (the National Council for Metal Detecting), that we won’t publish find spots online at a precision higher than a 4 figure National Grid Reference or parish level. Grid references can also be hidden from public view completely by asking the Finds Liaison Officer to enter a “known as” alias on the find spot form at the time of recording (or subsequently).
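The 4 figure public view can be derived mechanically from a more precise reference. A minimal sketch, assuming the same letters-plus-digits format; `toFourFigure()` is a hypothetical name. It truncates rather than rounds: a grid reference denotes the south-west corner of its square, so truncation keeps the find inside the published 1 km square.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: reduce a National Grid Reference of 4 or more figures
 * to a 4 figure (1 km square) reference suitable for public display.
 */
function toFourFigure(string $ngr): string
{
    $ngr = strtoupper(str_replace(' ', '', $ngr));
    if (!preg_match('/^([A-HJ-Z]{2})(\d{4,12})$/', $ngr, $m) || strlen($m[2]) % 2 !== 0) {
        throw new InvalidArgumentException("Need at least a 4 figure reference: $ngr");
    }
    // The digits split evenly into an easting half and a northing half.
    $half = intdiv(strlen($m[2]), 2);
    $easting = substr($m[2], 0, $half);
    $northing = substr($m[2], $half);
    // Keep only the first two digits of each half (kilometres within the 100 km square).
    return $m[1] . substr($easting, 0, 2) . substr($northing, 0, 2);
}
```

For example, `toFourFigure('SP12341234')` returns `SP1212`.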

Converting OSGB36 grid references to Latitude and Longitude pairs

Most web mapping programs use latitude and longitude pairs to display point data on their mapping interfaces. We therefore now convert all the NGRs on our database to LatLng, and store these as floats in two columns of our find spots table. Whilst converting the grid references to LatLng pairs, I also derive the following and insert them into our spatial data table:

- Grid reference length

- Accuracy of grid reference as shown above

- Four figure grid reference

- 1:25k map reference

- 1:10k map reference

- Findspot elevation

- Where on Earth ID
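The first step of the conversion is turning the two grid letters and the digits into full numeric eastings and northings. The following is a sketch of that step, not the Scheme's own code: `ngrToEastingNorthing()` is a hypothetical name, and it returns the south-west corner of the square the reference describes (so a 4 figure reference gives the corner of its 1 km square, not its centre).

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: convert an OSGB36 National Grid Reference such as
 * "TG5140913177" to [easting, northing] in metres (south-west corner of the square).
 */
function ngrToEastingNorthing(string $ngr): array
{
    $ngr = strtoupper(str_replace(' ', '', $ngr));
    if (!preg_match('/^([A-HJ-Z])([A-HJ-Z])(\d{0,12})$/', $ngr, $m) || strlen($m[3]) % 2 !== 0) {
        throw new InvalidArgumentException("Not a valid grid reference: $ngr");
    }
    // The 25 grid letters (no "I") form a 5x5 block with "A" at the north-west corner.
    $grid = 'ABCDEFGHJKLMNOPQRSTUVWXYZ';
    $i1 = strpos($grid, $m[1]);
    $i2 = strpos($grid, $m[2]);
    // First letter picks a 500 km square, offset so that "S" sits at the false origin;
    // second letter picks a 100 km square within it.
    $e = (($i1 % 5) - 2) * 500000 + ($i2 % 5) * 100000;
    $n = ((4 - intdiv($i1, 5)) - 1) * 500000 + (4 - intdiv($i2, 5)) * 100000;
    // Remaining digits: first half is eastings, second half northings, scaled to metres.
    $digits = $m[3];
    $half = intdiv(strlen($digits), 2);
    if ($half > 0) {
        $scale = 10 ** (5 - $half);
        $e += (int) substr($digits, 0, $half) * $scale;
        $n += (int) substr($digits, $half) * $scale;
    }
    return [$e, $n];
}
```

For example, `ngrToEastingNorthing('SP1212')` returns `[412000, 212000]`.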

The PHP functions that do this are based on some written by the original Oxford ArchDigital team, with some additions by me. Barry Hunter, Jeff Stott and others have also published versions on the web. My code is used as either a service or a view helper in my Zend Framework project and bundles together a variety of functions. I’m not a developer, I’ve just taught myself bits and pieces to get the PAS website back on the road; if you see errors, do let me know or suggest ways to improve the code.
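For readers who want to see what the projection step involves, here is a sketch of converting National Grid eastings and northings back to latitude and longitude with the inverse Transverse Mercator formulae that Ordnance Survey publishes for the Airy 1830 ellipsoid. `osgbToLatLng()` is a hypothetical name, not one of the functions described above. Note that the output is on the OSGB36 datum; web maps expect WGS84, and the two differ by the order of 100 metres across Britain, so a datum transformation (for example a Helmert transformation, not shown here) is still needed before plotting.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: OSGB36 National Grid easting/northing (metres) to
 * OSGB36 latitude/longitude in decimal degrees (inverse Transverse Mercator).
 */
function osgbToLatLng(float $E, float $N): array
{
    $a = 6377563.396; $b = 6356256.909;           // Airy 1830 semi-major/minor axes
    $F0 = 0.9996012717;                           // scale factor on the central meridian
    $lat0 = deg2rad(49.0); $lon0 = deg2rad(-2.0); // true origin
    $N0 = -100000.0; $E0 = 400000.0;              // grid coordinates of the true origin
    $e2 = 1 - ($b * $b) / ($a * $a);
    $n = ($a - $b) / ($a + $b);
    $n2 = $n * $n; $n3 = $n2 * $n;

    // Iterate until the meridional arc M matches the northing to within 0.01 mm.
    $lat = $lat0;
    $M = 0.0;
    do {
        $lat = ($N - $N0 - $M) / ($a * $F0) + $lat;
        $dLat = $lat - $lat0; $sLat = $lat + $lat0;
        $M = $b * $F0 * (
            (1 + $n + 1.25 * $n2 + 1.25 * $n3) * $dLat
            - (3 * $n + 3 * $n2 + 2.625 * $n3) * sin($dLat) * cos($sLat)
            + (1.875 * $n2 + 1.875 * $n3) * sin(2 * $dLat) * cos(2 * $sLat)
            - (35 / 24) * $n3 * sin(3 * $dLat) * cos(3 * $sLat)
        );
    } while (abs($N - $N0 - $M) >= 0.00001);

    $sin2 = sin($lat) ** 2;
    $nu = $a * $F0 / sqrt(1 - $e2 * $sin2);                  // transverse radius of curvature
    $rho = $a * $F0 * (1 - $e2) / (1 - $e2 * $sin2) ** 1.5;  // meridional radius of curvature
    $eta2 = $nu / $rho - 1;
    $t = tan($lat); $t2 = $t * $t; $t4 = $t2 * $t2; $t6 = $t4 * $t2;
    $sec = 1 / cos($lat);

    // Series coefficients VII to XIIA as named in the Ordnance Survey guide.
    $VII = $t / (2 * $rho * $nu);
    $VIII = $t / (24 * $rho * $nu ** 3) * (5 + 3 * $t2 + $eta2 - 9 * $t2 * $eta2);
    $IX = $t / (720 * $rho * $nu ** 5) * (61 + 90 * $t2 + 45 * $t4);
    $X = $sec / $nu;
    $XI = $sec / (6 * $nu ** 3) * ($nu / $rho + 2 * $t2);
    $XII = $sec / (120 * $nu ** 5) * (5 + 28 * $t2 + 24 * $t4);
    $XIIA = $sec / (5040 * $nu ** 7) * (61 + 662 * $t2 + 1320 * $t4 + 720 * $t6);

    $dE = $E - $E0;
    $lat = $lat - $VII * $dE ** 2 + $VIII * $dE ** 4 - $IX * $dE ** 6;
    $lon = $lon0 + $X * $dE - $XI * $dE ** 3 + $XII * $dE ** 5 - $XIIA * $dE ** 7;

    return ['lat' => rad2deg($lat), 'lng' => rad2deg($lon)];
}
```

As a check against the Ordnance Survey worked example, `osgbToLatLng(651409.903, 313177.270)` should give roughly 52.6576, 1.7179 (OSGB36).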

Using Yahoo! to geo-enrich our data

Several years ago, the great Tyler Bell (formerly of Oxford ArchDigital and Yahoo!) gave a paper at an archaeological computing conference at UCL’s Institute of Archaeology, where he cracked his joke about XML being like high school sex (I won’t elaborate on this; ask him) whilst some toe-rags were trying to steal my push bike (they came off badly, as I was standing behind them!). Tyler’s paper gave me much food for thought, and it is only over the last year or so that the idea has really come to fruition with our data. The advent of Yahoo!’s suite of GeoPlanet tools has allowed us to do various things with our data set and present it in different ways. Below, I’ll show you some of the things this powerful suite of tools has allowed us to do.

Putting dots on maps for finds with just a parish name

Prior to 2003, the majority of the finds we received had a very vague find spot, often just to parish level. As everyone loves maps and would like to see where these finds came from, I wanted a map on every page that needed one. Previously, our FLOs would be asked to centre such find spots on the parish by hand; that is now unnecessary, because GeoPlanet can supply a latitude and longitude, postcode, settlement type, bounding box and WOEID to enhance the data that we hold. Doing this is pretty simple with the aid of YQL.

I first heard about YQL from Jim O’Donnell (formerly NMM’s web wizard) and then more at the Yahoo! Hack Day in London, when I pedalled round London with Andrew Larcombe on the Purple Pedals bikes. Yahoo! describes YQL as SELECT * FROM Internet, which is pretty accurate. Building Open Data Tables for their system is easy; I’ll write more about some museum tables in another post soon. All my geo extraction is performed using YQL, and the examples below show how. All of them use the public endpoint. If you run a high-traffic site, it is definitely worth changing your code to use OAuth and authenticate your YQL calls against the non-public endpoint (better rate limits etc). It is slightly tricky, and you do need to work out how to refresh your Yahoo! token, but it is worth the effort.
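As a sketch of calling the public endpoint over plain HTTP, the helper below builds the REST URL for a YQL statement. `yqlPublicUrl()` is a hypothetical name; the `format=json` parameter asks YQL for JSON instead of the default XML, and the optional `$env` argument is only needed for community tables. It only builds the URL: fetching it (with cURL, Zend_Http_Client or similar) and the OAuth-signed variant are left out.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: build a GET URL for the public YQL endpoint.
 * $env is optional and only needed for community (datatables.org) tables.
 */
function yqlPublicUrl(string $statement, ?string $env = null): string
{
    $params = ['q' => $statement, 'format' => 'json'];
    if ($env !== null) {
        $params['env'] = $env;
    }
    // PHP_QUERY_RFC3986 percent-encodes spaces as %20 (rather than "+").
    return 'http://query.yahooapis.com/v1/public/yql?'
        . http_build_query($params, '', '&', PHP_QUERY_RFC3986);
}
```

For the Stapleford query below, this produces the same REST URL as the one given for it, plus `&format=json`, except that `*` is percent-encoded as `%2A`.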

For example, I grew up in Stapleford, Cambridgeshire and you can search for that with the following YQL call:

```
select * from geo.places where text="stapleford,cambridgeshire"
```

This maps to the following REST URL:

[http://query.yahooapis.com/v1/public/yql?q=select%20\*%20from%20geo.places%20where%20text%3D%22stapleford%2Ccambridgeshire%22](http://query.yahooapis.com/v1/public/yql?q=select%20*%20from%20geo.places%20where%20text%3D%22stapleford%2Ccambridgeshire%22 "REST call to geoplanet for Stapleford") which produces an XML or JSON response like the one below (diagnostics omitted):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng" yahoo:count="1" yahoo:created="2010-05-05T11:12:49Z" yahoo:lang="en-US">
  <results>
    <place xmlns="http://where.yahooapis.com/v1/schema.rng" xml:lang="en-US" yahoo:uri="http://where.yahooapis.com/v1/place/35984">
      <woeid>35984</woeid>
      <placeTypeName code="7">Town</placeTypeName>
      <name>Stapleford</name>
      <country code="GB" type="Country">United Kingdom</country>
      <admin1 code="GB-ENG" type="Country">England</admin1>
      <admin2 code="GB-CAM" type="County">Cambridgeshire</admin2>
      <admin3/>
      <locality1 type="Town">Stapleford</locality1>
      <locality2/>
      <postal type="Postal Code">CB22 5</postal>
      <centroid>
        <latitude>52.145329</latitude>
        <longitude>0.151490</longitude>
      </centroid>
      <boundingBox>
        <southWest>
          <latitude>52.127220</latitude>
          <longitude>0.133460</longitude>
        </southWest>
        <northEast>
          <latitude>52.164879</latitude>
          <longitude>0.176640</longitude>
        </northEast>
      </boundingBox>
      <areaRank>3</areaRank>
      <popRank>1</popRank>
    </place>
  </results>
</query>
```

By parsing the XML or JSON response (I tend to use JSON), a latitude and longitude pair can be retrieved for placing the object onto the map. It isn’t the true find spot, but it at least gives a high-level overview of the point of origin. Whilst doing this, I also keep the postcode, WOEID, bounding box etc for reuse. Parsing the data is pretty simple once you have your response and have decoded the JSON, for example:

```php
<?php
// Takes the YQL geo.places response decoded with json_decode($body) (objects,
// not associative arrays) and returns a flat array. The function name is illustrative.
function parsePlace($place)
{
    $place = $place->query->results->place;

    $placeData = array();
    $placeData['woeid'] = (string) $place->woeid;
    $placeData['placeTypeName'] = (string) $place->placeTypeName->content;
    $placeData['name'] = (string) $place->name;

    // Optional fields: empty elements such as <admin3/> decode to null,
    // so only copy those that actually carry a value.
    foreach (array('country', 'admin1', 'admin2', 'admin3', 'locality1', 'locality2', 'postal') as $field) {
        if (!empty($place->$field->content)) {
            $placeData[$field] = (string) $place->$field->content;
        }
    }

    $placeData['latitude'] = (float) $place->centroid->latitude;
    $placeData['longitude'] = (float) $place->centroid->longitude;
    $placeData['centroid'] = array(
        'lat' => (string) $place->centroid->latitude,
        'lng' => (string) $place->centroid->longitude,
    );
    $placeData['boundingBox'] = array(
        'southWest' => array(
            'lat' => (string) $place->boundingBox->southWest->latitude,
            'lng' => (string) $place->boundingBox->southWest->longitude,
        ),
        'northEast' => array(
            'lat' => (string) $place->boundingBox->northEast->latitude,
            'lng' => (string) $place->boundingBox->northEast->longitude,
        ),
    );

    return $placeData;
}
```

The image below shows an autogenerated findspot and a parish boundary (see below for flickr shapefile use) and adjacent places.

Within our database, a certainty field records where the coordinates originate. Its lookup table has the following values:

- From a map

- From finder verbally

- GPS from the finder

- GPS from the FLO

- Centred on the parish via a paper map

- Recorded at a rally (so certainty could be dubious)

- Produced via webservice

Researchers are therefore apprised of where each findspot comes from, and whether (if at all) it can be treated as useful.

Getting elevation (via Geonames)

The WOEID or the LatLng can be used to get the elevation of the find spot. This can be achieved by combining reverse geocoding against Flickr places (for the WOEID) with the Geonames API call for ‘Elevation – Aster Global Digital Elevation Model’. For example, to get the elevation for the centre of Stapleford, you can query the Geonames API with the following YQL:

```
select * from json where url="http://ws.geonames.org/astergdemJSON?lat=52.145329&lng=0.151490";
```

When executed, this produces the following response:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng"
    yahoo:count="1" yahoo:created="2010-09-30T12:26:00Z" yahoo:lang="en-US">
  <results>
    <json>
      <astergdem>17</astergdem>
      <lng>0.15149</lng>
      <lat>52.145329</lat>
    </json>
  </results>
</query>
```

So I now have an elevation of 17 metres above sea level. Great! I’ve been experimenting a bit with this against some findspots whose elevation we already know. For one high-profile object, the Crosby Garrett Helmet, the GPS elevation and the Geonames-sourced elevation differed by only 1 metre.

By providing an elevation for each of our findspots, researchers can then do viewshed analysis; I don’t think anyone has really done this yet for the artefact distributions that we record, but I could be proved wrong!
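The elevation lookup above boils down to building one URL and reading one number from the reply. A minimal sketch, assuming the host and parameters from the YQL query above and a direct JSON reply of the form `{"astergdem":17,"lng":0.15149,"lat":52.145329}`; the function names are hypothetical. Geonames returns -9999 where the ASTER model has no data (for example over the sea), so that value is mapped to null rather than stored as a real height.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: the Geonames ASTER GDEM URL for a point.
function astergdemUrl(float $lat, float $lng): string
{
    return 'http://ws.geonames.org/astergdemJSON?' . http_build_query(['lat' => $lat, 'lng' => $lng]);
}

// Hypothetical helper: elevation in metres from the JSON reply, or null for "no data".
function parseAstergdem(string $json): ?int
{
    $data = json_decode($json, true, 512, JSON_THROW_ON_ERROR);
    if (!isset($data['astergdem']) || (int) $data['astergdem'] === -9999) {
        return null;
    }
    return (int) $data['astergdem'];
}
```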

Reverse geocoding from Latitude and Longitude with Yahoo!

At present, the GeoPlanet suite doesn’t provide reverse geocoding, but you can still do it via YQL using the following query:

```
select * from flickr.places where lon={longitude} and lat={latitude}
```

For example, using Stapleford’s latitude and longitude as the query parameters gives:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng" yahoo:count="1" yahoo:created="2010-05-05T01:27:47Z" yahoo:lang="en-US">
  <results>
    <places accuracy="16" latitude="52.145329" longitude="0.151490" total="1">
      <place latitude="52.145" longitude="0.151" name="Stapleford, England, United Kingdom" place_id="m2G8tyiaBJVjFQ" place_type="locality" place_type_id="7" place_url="/United+Kingdom/England/Stapleford/in-Cambridgeshire" timezone="Europe/London" woeid="35984"/>
    </places>
  </results>
</query>
```

You’ll notice a couple of useful things in the Flickr XML returned, for example the place_url, in this case /united+kingdom/england/stapleford/in-cambridgeshire. Appended to Flickr’s places URL, this gives http://www.flickr.com/places/united+kingdom/england/stapleford/in-cambridgeshire, and that page in turn gives you access to feeds in various flavours.
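Pulling those values out of the flickr.places response takes only a few lines with SimpleXML. A sketch, assuming the element and attribute names shown in the response above; `flickrPlaceFromResponse()` is a hypothetical name, and the URL prefix is the Flickr places address given above.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: read the first <place> from a YQL flickr.places response
 * and return its WOEID, name and Flickr places page URL (null if nothing matched).
 */
function flickrPlaceFromResponse(string $xml): ?array
{
    $doc = simplexml_load_string($xml);
    if ($doc === false || !isset($doc->results->places->place)) {
        return null;
    }
    $place = $doc->results->places->place[0];
    return [
        'woeid' => (int) $place['woeid'],
        'name' => (string) $place['name'],
        // place_url already starts with "/", so it can be appended directly.
        'url' => 'http://www.flickr.com/places' . (string) $place['place_url'],
    ];
}
```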

Another useful feature of Flickr’s API is place info. I’d love a boundary map of how Flickr views the parish of Stapleford, and as I have already obtained a WOEID for my findspot, I can check whether Flickr has this data with the following YQL query:

```
select * from flickr.places.info where woe_id='35984'
```

And execute it to obtain the following XML:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng"
    yahoo:count="1" yahoo:created="2010-09-30T12:34:50Z" yahoo:lang="en-US">
  <results>
    <place has_shapedata="1" latitude="52.145" longitude="0.151"
        name="Stapleford, England, United Kingdom"
        place_id="m2G8tyiaBJVjFQ" place_type="locality"
        place_type_id="7"
        place_url="/United+Kingdom/England/Stapleford/in-Cambridgeshire"
        timezone="Europe/London" woeid="35984">
      <locality latitude="52.145" longitude="0.151"
          place_id="m2G8tyiaBJVjFQ"
          place_url="/United+Kingdom/England/Stapleford/in-Cambridgeshire" woeid="35984">Stapleford, England, United Kingdom</locality>
      <county latitude="52.373" longitude="0.007"
          place_id="pVJUVwKYA5qQZa9wqQ"
          place_url="/pVJUVwKYA5qQZa9wqQ" woeid="12602140">Cambridgeshire, England, United Kingdom</county>
      <region latitude="52.883" longitude="-1.974"
          place_id="pn4MsiGbBZlXeplyXg"
          place_url="/United+Kingdom/England" woeid="24554868">England, United Kingdom</region>
      <country latitude="54.314" longitude="-2.230"
          place_id="DevLebebApj4RVbtaQ"
          place_url="/United+Kingdom" woeid="23424975">United Kingdom</country>
      <shapedata alpha="0.00015" count_edges="16"
          count_points="44" created="1248244568" has_donuthole="0" is_donuthole="0">
        <polylines>
          <polyline>52.155731201172,0.17115999758244 52.158447265625,0.17576499283314 52.159084320068,0.18161700665951 52.159244537354,0.18208900094032 52.15747833252,0.18410600721836 52.153221130371,0.18645000457764 52.151500701904,0.17897999286652 52.14905166626,0.17045900225639 52.136436462402,0.15260599553585 52.135303497314,0.14247800409794 52.140232086182,0.13955999910831 52.145477294922,0.14135999977589 52.145721435547,0.14150799810886 52.145240783691,0.14707000553608 52.154125213623,0.16043299436569 52.155731201172,0.17115999758244</polyline>
        </polylines>
        <urls>
          <shapefile>http://farm4.static.flickr.com/3483/shapefiles/35984_20090722_6d95b5e27e.tar.gz</shapefile>
        </urls>
      </shapedata>
    </place>
  </results>
</query>
```

Brilliant: the polyline can be used to draw an outline of the parish on the map.
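One way to get that outline onto a web map is to convert the polyline text into a GeoJSON polygon. A sketch, assuming the space-separated "lat,lng" pairs shown in the shapedata above; `polylineToGeoJson()` is a hypothetical name. GeoJSON orders each position as [longitude, latitude], the reverse of the Flickr string, so the pairs are swapped.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: turn a Flickr shapedata polyline ("lat,lng lat,lng ...")
 * into a GeoJSON Polygon geometry array.
 */
function polylineToGeoJson(string $polyline): array
{
    $ring = [];
    foreach (preg_split('/\s+/', trim($polyline)) as $pair) {
        [$lat, $lng] = array_map('floatval', explode(',', $pair));
        $ring[] = [$lng, $lat]; // GeoJSON positions are [longitude, latitude]
    }
    // A GeoJSON linear ring must be closed: first and last positions identical.
    if ($ring !== [] && $ring[0] !== $ring[count($ring) - 1]) {
        $ring[] = $ring[0];
    }
    return ['type' => 'Polygon', 'coordinates' => [$ring]];
}
```

Passing the result through `json_encode()` gives a geometry that most web mapping libraries can draw directly.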

Many of our objects are tied by descriptive prose to various places around the world. By using Yahoo!’s Placemaker, we can now extract place entities from the finds data, which allows cross-referencing of, for example, all objects that mention Avon, England in their description. The image below shows where you’ll see the tags displayed on the finds record; as I’m into Classics, you’ll notice I use lower-case Greek letters as bullets for these. Probably pretentious! Getting these tags is very straightforward with another simple YQL call. For example, this text is from the record of the famous Moorlands Patera:

```
SELECT * FROM geo.placemaker WHERE documentContent = "Only two other vessels with inscriptions naming forts on Hadrian’s Wall are known; the ‘Rudge Cup’ which was discovered in Wiltshire in 1725 (Horsley 1732; Henig 1995) and the ‘Amiens patera’ found in Amiens in 1949 (Heurgon 1951). Between them they name seven forts, but the Staffordshire patera is the first to include Drumburgh and is the only example to name an individual. All three are likely to be souvenirs of Hadrian’s Wall, although why they include forts on the western end of the Wall only is unclear" AND documentType="text/plain"
```

This produces the following XML output:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng" yahoo:count="1" yahoo:created="2010-05-05T04:26:57Z" yahoo:lang="en-US">
  <results>
    <matches>
      <match>
        <place xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeId>575961</woeId>
          <type>Town</type>
          <name><![CDATA[Amiens, Picardie, FR]]></name>
          <centroid>
            <latitude>49.8947</latitude>
            <longitude>2.29316</longitude>
          </centroid>
        </place>
        <reference xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeIds>575961</woeIds>
          <start>177</start>
          <end>183</end>
          <isPlaintextMarker>1</isPlaintextMarker>
          <text><![CDATA[Amiens]]></text>
          <type>plaintext</type>
          <xpath><![CDATA[]]></xpath>
        </reference>
        <reference xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeIds>575961</woeIds>
          <start>201</start>
          <end>207</end>
          <isPlaintextMarker>1</isPlaintextMarker>
          <text><![CDATA[Amiens]]></text>
          <type>plaintext</type>
          <xpath><![CDATA[]]></xpath>
        </reference>
      </match>
      <match>
        <place xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeId>12602186</woeId>
          <type>County</type>
          <name><![CDATA[Wiltshire, England, GB]]></name>
          <centroid>
            <latitude>51.3241</latitude>
            <longitude>-1.9257</longitude>
          </centroid>
        </place>
        <reference xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeIds>12602186</woeIds>
          <start>123</start>
          <end>132</end>
          <isPlaintextMarker>1</isPlaintextMarker>
          <text><![CDATA[Wiltshire]]></text>
          <type>plaintext</type>
          <xpath><![CDATA[]]></xpath>
        </reference>
      </match>
      <match>
        <place xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeId>12602189</woeId>
          <type>County</type>
          <name><![CDATA[Staffordshire, England, GB]]></name>
          <centroid>
            <latitude>52.8248</latitude>
            <longitude>-2.02817</longitude>
          </centroid>
        </place>
        <reference xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeIds>12602189</woeIds>
          <start>276</start>
          <end>289</end>
          <isPlaintextMarker>1</isPlaintextMarker>
          <text><![CDATA[Staffordshire]]></text>
          <type>plaintext</type>
          <xpath><![CDATA[]]></xpath>
        </reference>
      </match>
      <match>
        <place xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeId>23509175</woeId>
          <type>LandFeature</type>
          <name><![CDATA[Hadrian's Wall, Bardon Mill, England, GB]]></name>
          <centroid>
            <latitude>54.9522</latitude>
            <longitude>-2.32975</longitude>
          </centroid>
        </place>
        <reference xmlns="http://wherein.yahooapis.com/v1/schema">
          <woeIds>23509175</woeIds>
          <start>418</start>
          <end>432</end>
          <isPlaintextMarker>1</isPlaintextMarker>
          <text><![CDATA[Hadrian’s Wall]]></text>
          <type>plaintext</type>
          <xpath><![CDATA[]]></xpath>
        </reference>
      </match>
    </matches>
  </results>
</query>
```

In the above XML response, you can now see the matches that Placemaker has found in the text sent to their service. You can now parse this data and use it for tagging or any other purpose that you want to put the data to. YQL has the added benefit of caching at the Yahoo! end and you can do multiple queries in one call as demonstrated by Chris Heilmann in his Geoplanet explorer.
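Turning the Placemaker response into tags mostly means reading each place's WOEID and name. A sketch, assuming the XML layout shown above, in which the place elements carry the namespace http://wherein.yahooapis.com/v1/schema (so namespace-aware DOM lookups are used); `placemakerTags()` is a hypothetical name. Keying by WOEID means a place mentioned several times yields a single tag.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: extract unique place tags (WOEID => name) from a
 * YQL geo.placemaker XML response.
 */
function placemakerTags(string $xml): array
{
    $ns = 'http://wherein.yahooapis.com/v1/schema';
    $doc = new DOMDocument();
    if (!$doc->loadXML($xml)) {
        throw new RuntimeException('Could not parse Placemaker response');
    }
    $tags = [];
    foreach ($doc->getElementsByTagNameNS($ns, 'place') as $place) {
        $woeId = $place->getElementsByTagNameNS($ns, 'woeId')->item(0)?->textContent;
        $name = $place->getElementsByTagNameNS($ns, 'name')->item(0)?->textContent;
        if ($woeId !== null && $name !== null) {
            $tags[(int) $woeId] = trim($name); // repeated places collapse onto one key
        }
    }
    return $tags;
}
```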

A YQL Multiquery example

For example, I want to combine a Placemaker call with spatial information for a find spot where I only have the place name. To do this, I write this YQL query:

```
select * from query.multi where queries='
select * from geo.placemaker where documentContent = "Only two other vessels with inscriptions naming forts on Hadrian’s Wall are known the Rudge Cup which was discovered in Wiltshire in 1725 (Horsley 1732, Henig 1995) and the Amiens patera found in Amiens in 1949 (Heurgon 1951). Between them they name seven forts, but the Staffordshire patera is the first to include Drumburgh and is the only example to name an individual. All three are likely to be souvenirs of Hadrian’s Wall, although why they include forts on the western end of the Wall only is unclear" and documentType="text/plain" and appid="";
select * from geo.places where text="staffordshire moorlands, staffordshire,uk" '
```

The base URL for this call is the public endpoint, http://query.yahooapis.com/v1/public/yql, and as we are using one of the community tables, the call needs &env=store://datatables.org/alltableswithkeys appended (URL-encoded).

This can be run in the YQL console and produces the following XML response: four place matches, plus the geo data for Staffordshire Moorlands.

<?xml version="1.0" encoding="UTF-8"?>

<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng"

yahoo:count="2" yahoo:created="2010-09-30T11:44:08Z" yahoo:lang="en-US">

<results>

<results>

<matches>

<match>

<place xmlns="http://wherein.yahooapis.com/v1/schema">

<woeId>575961</woeId>

<type>Town</type>

<name><![CDATA[Amiens, Picardie, FR]]></name>

<centroid>

<latitude>49.8947</latitude>

<longitude>2.29316</longitude>

</centroid>

</place>

<reference xmlns="http://wherein.yahooapis.com/v1/schema">

<woeIds>575961</woeIds>

<start>196</start>

<end>202</end>

<isPlaintextMarker>1</isPlaintextMarker>

<text><![CDATA[Amiens]]></text>

<type>plaintext</type>

<xpath><![CDATA[]]></xpath>

</reference>

</match>

<match>

<place xmlns="http://wherein.yahooapis.com/v1/schema">

<woeId>12602186</woeId>

<type>County</type>

<name><![CDATA[Wiltshire, England, GB]]></name>

<centroid>

<latitude>51.3241</latitude>

<longitude>-1.9257</longitude>

</centroid>

</place>

<reference xmlns="http://wherein.yahooapis.com/v1/schema">

<woeIds>12602186</woeIds>

<start>120</start>

<end>129</end>

<isPlaintextMarker>1</isPlaintextMarker>

<text><![CDATA[Wiltshire]]></text>

<type>plaintext</type>

<xpath><![CDATA[]]></xpath>

</reference>

</match>

<match>

<place xmlns="http://wherein.yahooapis.com/v1/schema">

<woeId>12602189</woeId>

<type>County</type>

<name><![CDATA[Staffordshire, England, GB]]></name>

<centroid>

<latitude>52.8248</latitude>

<longitude>-2.02817</longitude>

</centroid>

</place>

<reference xmlns="http://wherein.yahooapis.com/v1/schema">

<woeIds>12602189</woeIds>

<start>271</start>

<end>284</end>

<isPlaintextMarker>1</isPlaintextMarker>

<text><![CDATA[Staffordshire]]></text>

<type>plaintext</type>

<xpath><![CDATA[]]></xpath>

</reference>

</match>

<match>

<place xmlns="http://wherein.yahooapis.com/v1/schema">

<woeId>23509175</woeId>

<type>LandFeature</type>

<name><![CDATA[Hadrian's Wall, Bardon Mill, England, GB]]></name>

<centroid>

<latitude>54.9522</latitude>

<longitude>-2.32975</longitude>

</centroid>

</place>

<reference xmlns="http://wherein.yahooapis.com/v1/schema">

<woeIds>23509175</woeIds>

<start>413</start>

<end>427</end>

<isPlaintextMarker>1</isPlaintextMarker>

<text><![CDATA[Hadrian’s Wall]]></text>

<type>plaintext</type>

<xpath><![CDATA[]]></xpath>

</reference>

</match>

</matches>

</results>

<results>

<place xmlns="http://where.yahooapis.com/v1/schema.rng"

xml:lang="en-US" yahoo:URI\="http://where.yahooapis.com/v1/place/12696078">

<woeid>12696078</woeid>

<placeTypeName code="10">Local Administrative Area</placeTypeName>

<name>Staffordshire Moorlands District</name>

<country code="GB" type="Country">United Kingdom</country>

<admin1 code="GB-ENG" type="Country">England</admin1>

<admin2 code="GB-STS" type="County">Staffordshire</admin2>

<admin3/>

<locality1/>

<locality2/>

<postal/>

<centroid>

<latitude>53.071468</latitude>

<longitude>-1.993490</longitude>

</centroid>

<boundingBox>

<southWest>

<latitude>52.916691</latitude>

<longitude>-2.211330</longitude>

</southWest>

<northEast>

<latitude>53.226250</latitude>

<longitude>-1.775660</longitude>

</northEast>

</boundingBox>

<areaRank>6</areaRank>

<popRank>0</popRank>

</place>

</results>

</results>

</query>
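The matches in a response like the one above can be flattened into records an application can store. This is a minimal Python sketch for illustration only (the site itself runs on Zend Framework code). It assumes the response has already been fetched, uses a trimmed copy of the Wiltshire `<match>` from above, and `extract_places` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Namespace of the <place> and <reference> elements in the output above.
WHEREIN_NS = {"w": "http://wherein.yahooapis.com/v1/schema"}

# Trimmed copy of one <match> from the output above, wrapped in <matches>.
SAMPLE = """<matches>
  <match>
    <place xmlns="http://wherein.yahooapis.com/v1/schema">
      <woeId>12602186</woeId>
      <type>County</type>
      <name><![CDATA[Wiltshire, England, GB]]></name>
      <centroid><latitude>51.3241</latitude><longitude>-1.9257</longitude></centroid>
    </place>
    <reference xmlns="http://wherein.yahooapis.com/v1/schema">
      <woeIds>12602186</woeIds>
      <start>120</start>
      <end>129</end>
      <text><![CDATA[Wiltshire]]></text>
    </reference>
  </match>
</matches>"""


def extract_places(xml_text):
    """Return one dict per <match>: matched text, WOEID, type, name and centroid."""
    root = ET.fromstring(xml_text)
    places = []
    # <match> itself is not namespaced; its <place>/<reference> children are.
    for match in root.iter("match"):
        place = match.find("w:place", WHEREIN_NS)
        ref = match.find("w:reference", WHEREIN_NS)
        if place is None or ref is None:
            continue  # skip incomplete matches rather than failing
        places.append({
            "text": ref.findtext("w:text", namespaces=WHEREIN_NS),
            "woeid": int(place.findtext("w:woeId", namespaces=WHEREIN_NS)),
            "type": place.findtext("w:type", namespaces=WHEREIN_NS),
            "name": place.findtext("w:name", namespaces=WHEREIN_NS),
            "lat": float(place.findtext("w:centroid/w:latitude", namespaces=WHEREIN_NS)),
            "lon": float(place.findtext("w:centroid/w:longitude", namespaces=WHEREIN_NS)),
        })
    return places


print(extract_places(SAMPLE))
```

Keeping the matched `text` alongside the resolved place makes it easy to spot false positives later.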

Concordance with other services

One of the other things I am interested in is finding concordance between WOEID and Geonames places. This is quite easy to do using another geo table. For example, to look up Amiens, Picardie, by its WOEID:

select * from geo.concordance where namespace="woeid" and text="575961"

Produces:

<?xml version="1.0" encoding="UTF-8"?>

<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng"

yahoo:count="1" yahoo:created="2010-09-30T12:41:28Z" yahoo:lang="en-US">

<results>

<concordance xml:lang="en-US"

xmlns="http://where.yahooapis.com/v1/schema.rng"

xmlns:yahoo="http://www.yahooapis.com/v1/base.rng" yahoo:URI="http://where.yahooapis.com/v1/concordance/woeid/575961">

<woeid>575961</woeid>

<geonames>3037854</geonames>

<locode>FRAMI</locode>

</concordance>

</results>

</query>

So you now have both a WOEID and a Geonames ID for Amiens: WOEID 575961 and Geonames 3037854. You can use the Geonames ID for linked data; for example: http://ws.geonames.org/rdf?geonameId=3037854
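Pulling the two IDs out of the concordance response takes only a few lines. This is a minimal Python sketch for illustration, not the Scheme's own code. It assumes the YQL response has already been fetched (the HTTP call is left out) and uses a trimmed copy of the output above. `parse_concordance` is a hypothetical helper name, and the RDF URL pattern is the one quoted above.

```python
import xml.etree.ElementTree as ET

# Default namespace of <concordance> and its children in the YQL output above.
GEO_NS = {"geo": "http://where.yahooapis.com/v1/schema.rng"}

# Trimmed copy of the geo.concordance response shown above.
SAMPLE_RESPONSE = """
<query xmlns:yahoo="http://www.yahooapis.com/v1/base.rng"
       yahoo:count="1" yahoo:created="2010-09-30T12:41:28Z" yahoo:lang="en-US">
  <results>
    <concordance xml:lang="en-US" xmlns="http://where.yahooapis.com/v1/schema.rng">
      <woeid>575961</woeid>
      <geonames>3037854</geonames>
      <locode>FRAMI</locode>
    </concordance>
  </results>
</query>
"""


def parse_concordance(xml_text):
    """Return (woeid, geonames_id, geonames_rdf_url), or None if there is no Geonames match."""
    root = ET.fromstring(xml_text.strip())
    concordance = root.find(".//geo:concordance", GEO_NS)
    if concordance is None:
        return None
    woeid = concordance.findtext("geo:woeid", namespaces=GEO_NS)
    geonames_id = concordance.findtext("geo:geonames", namespaces=GEO_NS)
    if not geonames_id:
        return None
    # Linked-data URL in the form quoted in the post.
    rdf_url = "http://ws.geonames.org/rdf?geonameId=" + geonames_id
    return woeid, geonames_id, rdf_url


print(parse_concordance(SAMPLE_RESPONSE))
```

Returning `None` when no Geonames ID comes back lets the caller skip the linked-data step for places without a concordance.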

Problems using YQL for geodata

Even though combining YQL with the power of GeoPlanet is awesome, I did run into a few problems, none of them really insurmountable:

- Constantly hitting the rate limit – Google’s indexing of our site was causing our server to make too many requests to YQL; fixed by changing the caching model and switching to the OAuth endpoint. I also changed my code to ignore responses whose headers were text/html;charset=UTF-8, because the rate-limit page thrown up by Yahoo! is HTML rather than an XML response.

- Some irrelevant places were pulled out of the text – ‘Copper Alloy, Tamil Nadu’ is one example; fixed by creating a stop list.

- The Geonames API sometimes takes a while to respond, which made the application hang; fixed by changing the cURL settings.

- It took quite a long time to parse 400,000 records – not much I can do about that!
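The first two fixes can be sketched as three small guards: reject HTML replies, drop stop-listed matches, and cache good results so repeat requests (for example from a crawler) do not hit YQL again. This is a minimal Python sketch under stated assumptions, not the Scheme's actual caching model, which the post does not describe. `STOP_LIST`, `is_xml_response`, `filter_matches` and `TTLCache` are hypothetical names, and the one-hour TTL is an arbitrary example.

```python
import time

# Hypothetical stop list of matched strings that are not real findspots, e.g. the
# PAS material term "Copper Alloy", which was being resolved to a place in Tamil Nadu.
STOP_LIST = {"copper alloy"}


def is_xml_response(content_type):
    """Return False for HTML replies such as the YQL rate-limit page.

    Only the media type before any ';' is compared, so both
    'text/html;charset=UTF-8' and 'text/html; charset=UTF-8' are rejected.
    """
    media_type = content_type.split(";", 1)[0].strip().lower()
    return media_type != "text/html"


def filter_matches(matched_texts, stop_list=STOP_LIST):
    """Drop extracted place strings that appear (case-insensitively) on the stop list."""
    return [t for t in matched_texts if t.strip().lower() not in stop_list]


class TTLCache:
    """Minimal in-memory cache so repeat requests reuse one YQL result."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get_or_fetch(self, key, fetch):
        """Return a cached value younger than ttl, otherwise call fetch().

        None (e.g. an ignored HTML response) is returned but never cached,
        so the next request will try again.
        """
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]
        value = fetch()
        if value is not None:
            self._store[key] = (now, value)
        return value
```

In use, `fetch` would wrap the real YQL request and return `None` whenever `is_xml_response` rejects the reply's Content-Type header. That way a rate-limit page is never cached as if it were data.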

Even so, I’d really recommend using YQL to extract geodata for your application. Hopefully Yahoo! will keep YQL and Geo as integral parts of their business model. In future, I would love to run the British Museum collections data through these functions and see what cross-referencing I could find.

09/24/2010

The Scheme’s new website has now been online for 6 months, and I’ve been looking at its performance and the costs incurred during this period. We’ve had several large discoveries since the site went live, for instance the Frome Hoard and the Crosby Garrett Roman Helmet. However, such finds aren’t typical, so we don’t get big daily spikes in referrals from large news aggregators or providers. I’m a little disappointed that web traffic hasn’t grown significantly since we launched the new site, but visitors are still spending a long time on the site and viewing many pages per visit. I’ve worked hard on search engine visibility (apart from a blip in July when I blocked all search engines via a typo in my robots.txt file – as the great Homer says, D’oh!) and we’re now seeing a surge in pages being added to Google’s index (nearly 50% of our 400,000 publicly accessible pages are now included, according to Webmaster Tools).
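The July blip shows how little separates an open robots.txt from a closed one. The post does not say what the typo actually was, so the two files in this Python sketch are illustrative assumptions. The sketch uses the standard library's `urllib.robotparser` to check a draft file before it goes live. `allows_crawling` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def allows_crawling(robots_lines, url, user_agent="Googlebot"):
    """Return True if robots.txt (given as a list of lines) lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse() takes the lines directly, no network fetch needed
    return parser.can_fetch(user_agent, url)


# An empty Disallow allows everything; "Disallow: /" blocks the whole site.
open_site = ["User-agent: *", "Disallow:"]
blocked_site = ["User-agent: *", "Disallow: /"]

for name, lines in (("open", open_site), ("blocked", blocked_site)):
    print(name, allows_crawling(lines, "http://www.finds.org.uk/"))
```

A check like this could run before every deployment, so a one-character slip in robots.txt fails loudly instead of quietly removing the site from search results.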

Web statistics

All the web statistics are produced via Google Analytics; I haven’t bothered with the old logfile analysis. The old stats that we used to return to the DCMS and quote in our annual reports relied heavily on ‘hits’, a metric I always hated. Some simple observations:

New functions

Since launch, we’ve released lots of new features, all based on Zend Framework code:

- More extensive mining of TheyWorkForYou for Parliamentary data

- Heavy use of YQL throughout the website

- Flickr images pulled in

- OAuth YQL calls to make use of Yahoo! geo functions

- Created a number of YQL tables for museum and heritage website APIs and OpenSearch modules

- Integrated GeoPlanet data from Yahoo!’s data dump into the database backend

- Added old OS maps from the National Library of Scotland (these are great and easy to implement) to most of our maps; for example, search for ‘Sompting’ axeheads and click on ‘historical’

- Integrated the Ordnance Survey 1:50K dataset for antiquities and Roman sites

- Integrated the English Heritage Scheduled Monuments dataset (only available to higher-level users)

- Pulled in data from Amazon for our references (prices, book cover art etc.), for example ‘Toys, trifles and trinkets’ by Egan and Forsyth

- Mined the Guardian API for news relating to the Scheme

- Created functions for the public to record their own objects and find previously recorded ones easily. This has been quite well received, see Garry Crace’s article on how he found it.

- Used some semantic techniques (FOAF, for example – our contacts page uses this in RDFa)

- Added context-switched formats for a wide array of pages across the site

- Got OAI access working

- Created extensive sitemaps for search indexing
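With around 400,000 public pages, one sitemap file is not enough. The sitemaps.org protocol caps each sitemap at 50,000 URLs, so a site this size needs several files plus a sitemap index that points to them. This is a minimal Python sketch of that splitting, not the Scheme's actual code. The record URL pattern is hypothetical.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50000  # per-file URL limit in the sitemaps.org protocol


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls):
    """Render one <urlset> sitemap document; escape() handles '&' in query strings."""
    entries = "".join(f"<url><loc>{escape(u)}</loc></url>" for u in urls)
    return f'<?xml version="1.0" encoding="UTF-8"?><urlset xmlns="{SITEMAP_NS}">{entries}</urlset>'


def build_index(sitemap_urls):
    """Render a <sitemapindex> that points at each child sitemap file."""
    entries = "".join(f"<sitemap><loc>{escape(u)}</loc></sitemap>" for u in sitemap_urls)
    return f'<?xml version="1.0" encoding="UTF-8"?><sitemapindex xmlns="{SITEMAP_NS}">{entries}</sitemapindex>'


# Hypothetical record URLs standing in for the ~400,000 public pages.
record_urls = [f"http://www.finds.org.uk/record/{n}" for n in range(1, 400001)]
parts = chunk(record_urls)
print(len(parts))  # 8 files of at most 50,000 URLs each
```

Each chunk would be written out with `build_urlset`, and the index would list the resulting file URLs. The index is the only file submitted to the search engine.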

Database statistics

Some raw statistics of progress with the new database can be seen below:

24,601 records have been created, documenting the discovery and recording of 94,978 objects (one hoard of coins alone adds 52,503 objects, so remove these and you get 42,475 objects). We also released functions that allow the public to record their own objects, and this has resulted in the addition of 740 records from 32 recorders. We expect this number to increase following the release of an instructional guide produced by our Kent FLA and FLO (Jess Bryan and Jen Jackson).

Users

User accounts created: 855, with no spam accounts so far.

- 2 Finds Adviser status

- 38 Finds Liaison Officer status

- 13 Historic Environment Officer status

- 745 ordinary members

- 57 Research status accounts

In the previous incarnation of our database at findsdatabase.org.uk, 1,135 accounts were created in 7 years.

Research

58 new research projects have been added to our research register with the following levels of activity:

962,601 searches have been performed since relaunch. We’ve had 132 reports of incorrect data being published on the site (undoubtedly there are more errors; people are just shy!) and around 250 comments on records. Both of these functions are protected by reCAPTCHA and Akismet, and we’ve had 5 spam submissions in 6 months.

Contributors of data

943 new contributors have offered data for recording or become involved by recording or researching. I’m tidying up the database so that we can better analyse what people use our facility for. We now collect primary activity and postcodes to support better statistical analysis.

Running costs for the following domains:

www.finds.org.uk

www.findsdatabase.org.uk

www.staffordshirehoard.org.uk

www.pastexplorers.org.uk

Server farm hosting fee: £828

Bandwidth cost for excess load: £234

Remote backup space: £900 (350GB images)

Amazon S3 backup space: £0.27 ($0.42) for 11GB data transfer of MySQL backups

Flickr licence: £15.30 ($24)

Get Satisfaction account: £36.38 ($57), which I cancelled after 3 months because it was underused.

Development costs: Covered by my salary, not revealing that.

Total IT running cost: £2013.95 (around 8p per record or, a more meaningless statistic because of the huge hoard, circa 2p per object)
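The total and the per-unit figures follow directly from the cost list and the database statistics above (24,601 records documenting 94,978 objects):

```latex
\begin{aligned}
\text{Total} &= 828 + 234 + 900 + 0.27 + 15.30 + 36.38 = \text{\pounds}2013.95 \\
\text{Cost per record} &= \frac{2013.95}{24\,601} \approx \text{\pounds}0.082 \approx 8\text{p} \\
\text{Cost per object} &= \frac{2013.95}{94\,978} \approx \text{\pounds}0.021 \approx 2\text{p}
\end{aligned}
```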

We plan to reduce this further by switching image backup to S3 as well, or by renegotiating with our excellent providers at Dedipower in Reading. Since the demise of Oxford ArchDigital, we’ve already saved c. £15,000 per annum in IT support fees, and all development work has been taken in-house.

Hopefully people are finding our new site much more useful; we’ve got more to come…

09/14/2010

Most detectorists will have heard of Sam Moorhead, the Roman Coin Advisor for the PAS, and his quest for ‘grots’. Sam would love all 4th-century coins to be recorded. He realised that these often worn and horrid coins were not being reported, and that this was skewing our understanding of the rural occupation of Roman Britain.

Since Sam’s quest started, metal detectorists have responded with gusto, to the point where some FLOs may wish Sam had never started it! The number of coins on the PAS database has now increased dramatically, and Philippa Walton (previously the FLO in the North East) will soon finish a PhD on the Roman coinage recorded on the PAS and how it has changed our picture of coin use across the country.

Like most FLOs, I record plenty of Roman coins. Since starting in 2007 I have recorded 919, despite working part time for 2 of those years, and in total there are 139,113 Roman coins on the database, so the volume of data is huge!

I had yet another fourth-century coin, luckily not too worn, which I recorded as DUR-A93BA2 on the database. It is a copper alloy nummus, probably of Valentinian I (364-75), minted in Arles. We know it was minted in Arles because on the reverse, in the field around the figure, are the letters OFIII. Both Lyons and Arles marked their coins to show which workshop (officina, abbreviated OF) they were made in. However, Lyons only had two workshops whilst Arles had three, so a coin marked OFIII (the third workshop) must come from Arles. This is just a drop in the ocean of knowledge about Roman coins, but I thought it was worth a note here as it may help people narrow their coins down to one mint or the other!

08/27/2010

Flint is a very difficult thing to spot when out and about in a field – well, it is for me anyway. Oh, don’t get me wrong, I can pick up bits of flint until the cows come home, but never ever have I managed to spot a worked piece! However, there are people out there with a sharp eye who manage to spot these fantastically crafted objects.

Quite often the flint I am given to record on the database is a tool, such as ESS-98FF03, a Mesolithic adze; ESS-388BD4, a Neolithic polished axe; or this exquisite barbed and tanged arrowhead, ESS-7B4111.

Bronze Age arrowhead

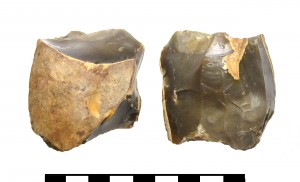

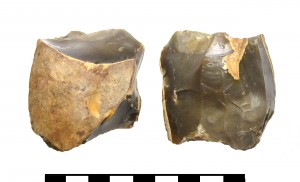

As interesting and beautiful as completed tools are, the main thing I have been working on this week is a load of old rubbish. To be a little more precise, I have been recording and identifying Mesolithic rubbish from an assemblage found in the Colchester District. Although a few tools were brought in with the assemblage, most of the finds are waste flakes (discarded fragments of knapped flint) and cores from which flakes and blades have been removed; see ESS-CFD107, ESS-CFCDD4 and ESS-CFF7C4 for a few examples.

Bladelet core

These discarded waste flakes and cores were not needed or adapted into tools by prehistoric man. They may not be as aesthetically pleasing to the modern eye as the completed tools, but they are vitally important to archaeologists. They help us to identify areas where knapping took place, which tells us where the tools were actually being made. This can be really interesting, as flint can be a rare commodity in parts of the country, and tool production does not always take place at the source of the flint. All the ‘rubbish’ can actually be more informative than a discarded tool. So recording flint assemblages, or even just one or two pieces, can help us to understand people’s interactions, their use of the landscape and the materials available to them.

Flint is a wonderful thing, but it can also be tricky and misleading! Not all of the flint brought in to me for recording over the years has been worked by humans; its shape can be entirely natural. Many smaller flint nodules fit wonderfully well in the hand without having been worked at all. Even fractured flint nodules may be the result of the natural environment, such as frost-fractured ‘pot lids’.

Given the right conditions (such as freezing and thawing), flint can break naturally in quite a regular-looking way. This is because the structure of flint is like a large lattice or grid, so natural breaks will quite often be relatively straight or angular, and their regularity can lead people to mistake them for man-made breaks.

It is this natural property of flint that made it such a great material to turn into tools. The lattice structure makes it relatively predictable as to where the breaks will form when pressure is applied, hence in prehistory we see a recognisable range of tools of similar design time and time again. These angular breaks are also exceptionally sharp – and some of the tools brought in for recording are still sharp today, thousands of years after they were made.

It can be tricky to tell natural fractures apart from damage caused by modern farming machinery and from deliberate man-made removals. If you bring a piece in for recording and its breaks turn out to be natural rather than worked, you are still holding a piece of really old natural history in your hands that was formed millions of years ago!

Take a look at ESS-851F72, a fantastic example of natural history meeting human history: an incomplete Mesolithic adze made from a flint nodule covered in a fossilised sponge.

08/24/2010

As promised back in July, I have had another interesting swivel:

SUSS-101B44 is of a more typical form than the one I highlighted previously (SUSS-225F31). It has a small loop with animal heads at the ends biting a domed centre from which projects a circular pin. This is the ‘male’ half of a two part swivel, similar to SF-CA5816.

What is particularly interesting to me are the remains of strap ends attached to the loop of the swivel. Their form means they were attached during manufacture and couldn’t be removed. I had always assumed the straps simply went through the loop of the swivel and rubbed directly on it. This solution, with the strap ends, both reduces wear on the strap if it is moving a great deal and allows two straps to be taken off the same swivel.

A quick search has not brought up any parallels, although more diligent searching might bring some to light. This may have been a particular response to the need to take two straps off one swivel, or to the use of straps made of a different, less hard-wearing material, perhaps a woven strip rather than leather. Once again, this item sheds new light on a common class of artefact, helps us understand it in a different way, and highlights that this class of artefact would benefit from more research.